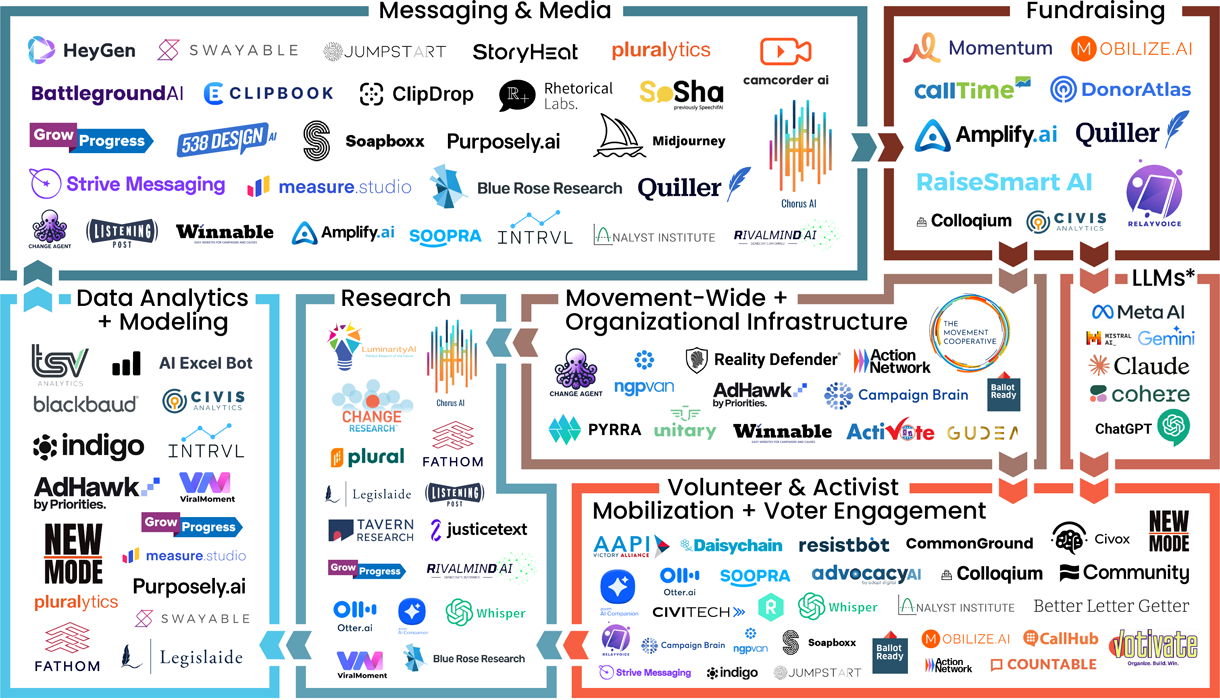

Generative AI has the potential to be one of the most transformative technologies of our lifetimes. Every industry is grappling with its impacts and how to prepare. Politics is no different.

This is why we are publishing this special edition of our annual Political Tech Landscape Report, focused entirely on the emerging use cases, needs, gaps, and opportunities presented by the introduction of generative AI in politics.

AI is moving quickly and changing every day. Headlines about bad actors using deepfakes or spreading misinformation are rightfully generating concern. For organizational leaders, weighing the technology’s opportunities and risks while incorporating AI into their workflows can be overwhelming, yet we have no choice. Since its launch in late 2022, ChatGPT has experienced faster growth than TikTok, Spotify, and YouTube combined. Choosing not to engage with this technology while the opposition, other industries, and voters adopt it means risking falling behind.

Read the full downloadable Landscape Report to explore the key political AI developments from 2024.

In our research, we approached AI as we would any other tool, asking what makes it effective or dangerous. How is it being used? Who is using it? What are the norms of what’s acceptable? Where are the opportunities for innovation? This report, the first of its kind, aims to explore these questions and outline how the AI political tech landscape is beginning to take shape.

Our research confirms that we’re still in the experimentation stage with AI. The technology itself is still nascent, yet generative AI already has the potential to help your team work more smoothly, quickly, and efficiently. Training is key to the responsible use of AI and helps ensure that human review is integral to any process involving it.

It’s natural to be wary of experimenting, especially in politics—where the stakes are high and time and resources are short. We hope you find this report informative and that it generates ideas on how you might leverage AI in your own work.

How we experiment with AI now will shape how we invest in and develop this technology for the next decade. Together, we can take the first step towards unlocking new potential for engaging volunteers, reaching new voters, and getting insights that were previously out of reach—keeping our campaigns on the cutting edge of how we win.

The Executive Summary

Campaigns are leveraging AI for efficiency, but human oversight remains crucial

Campaigns and organizations are leveraging AI to improve the efficiency and speed of content creation, data analysis, and communication. However, human oversight remains critical to ensure quality, accuracy, and ethical use.

Rising AI use in political communications

AI-generated content is becoming most prevalent in political communications, particularly in the generation of images, video, audio, and text. Thus far, AI-generated content has generally performed on par with content created entirely by humans, matching its performance as often as not. Testing and maintaining human oversight remain crucial when using this emerging technology.

Political tech practitioners and technologists grapple with AI tool selection and development

Political tech practitioners and technologists face a host of new factors when selecting and building AI tools. Accessibility, customization, privacy, and security considerations all play a role when organizations choose between free, general-purpose AI tools and specialized, politics-focused offerings. Politics-centric AI applications are also subject to the discretion of major AI providers, who may modify their Terms of Service to limit the use of their tools in political contexts.

Ongoing challenges countering AI-driven disinformation and deepfakes

Concerns around AI-generated misinformation, disinformation, and deepfakes are prompting regulatory actions from governments and tech companies. The effectiveness of these policies at curbing abuse by bad actors remains unclear, underscoring the need for increased vigilance and education around the responsible use of AI in political contexts.

Organizations start to prepare themselves for the responsible use of AI

Internal training, policies, and frameworks are critical to guiding the safe, ethical, and responsible use of AI, especially given the accessibility of free generative AI tools. While some organizations are experimenting with internal policies that balance innovation with considerations around data privacy, security, and workers’ rights, such practices remain uncommon.

We hope this resource offers insights into the progressive technology landscape that will aid you in the work ahead. A big thank you to the amazing team of researchers who crafted this report and the contributors who shared their experiences.